Click to read the full article published on Forbes.com.

Back in the 19th century, railroads revolutionized our world, connecting places, enabling transportation at scale and igniting a new wave of industrial growth. Today, we stand at the threshold of a similar transformation, as digital tracks are being laid across the United States. This digital infrastructure—a network of data centers, fiber optic cables, and cutting-edge hardware and software—is the modern equivalent of those transformative railroads, but with even greater potential.

This digital infrastructure is not just a supporting player in the upcoming AI revolution—it is the very foundation upon which our AI-driven future is being built. Just as the railroads of the past opened new frontiers and possibilities, today’s digital infrastructure is enabling unprecedented advancements and scale in artificial intelligence (AI) and its applications.

Digital Infrastructure And AI Adoption

The explosive growth of AI applications, particularly large language models like ChatGPT, has catapulted AI into the mainstream. OpenAI’s ChatGPT was estimated to have reached 100 million monthly active users just two months after its launch, making it at the time the fastest-growing consumer application in history. To put this in perspective, the previous record holders were far slower: TikTok took about nine months to reach 100 million users, and Instagram took about two and a half years.

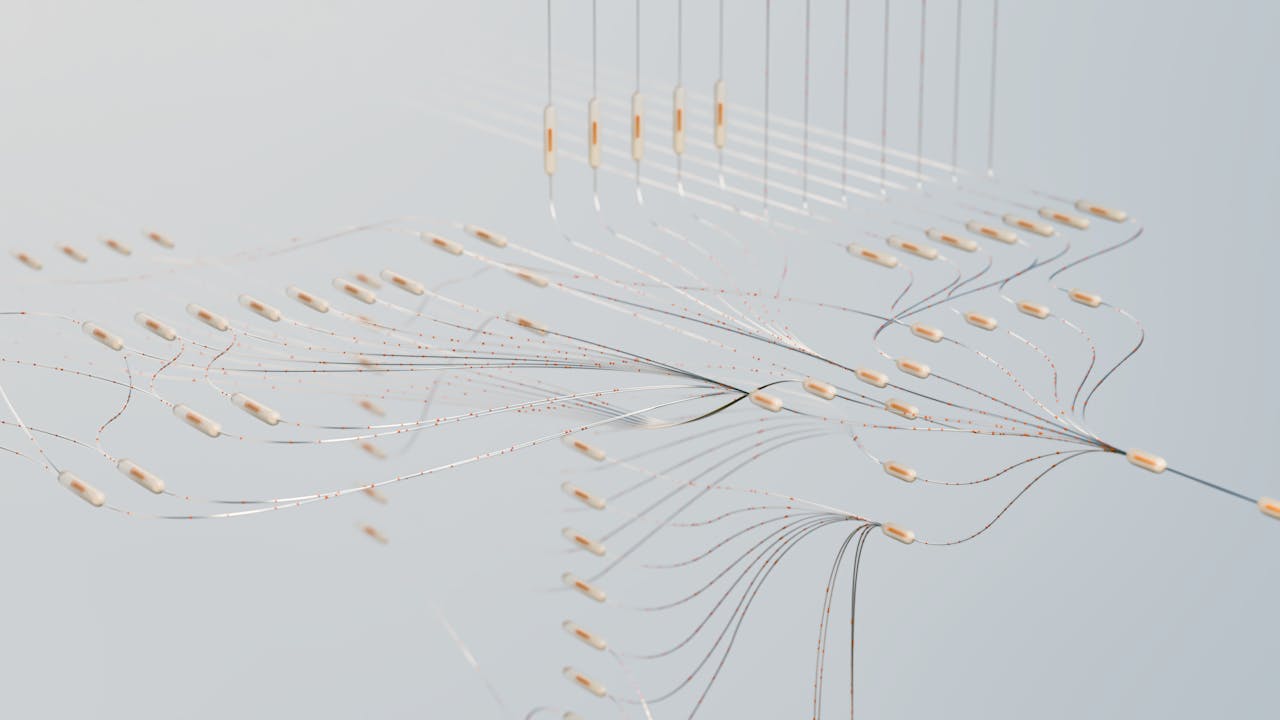

What users don’t see, however, is the vast infrastructure that powers these transformative applications. Behind every chat response or image generation lies a complex network of data centers housing hundreds of thousands of powerful computers. These facilities are the engine rooms of the AI revolution, processing enormous amounts of data and performing complex calculations at unprecedented speeds.

The energy demands of AI are staggering when viewed on a global scale. Training a single large language model like GPT-3 is estimated to consume about 1,287 megawatt-hours (MWh) of electricity, equivalent to the annual electricity usage of about 130 average U.S. homes. One study estimates that by 2027, the AI sector could consume between 85 and 134 terawatt-hours (TWh) annually. That is more than the annual electricity consumption of all but around 30 countries in the world.
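To make the scale of these figures concrete, the short Python sketch below works through the arithmetic. It uses only the numbers quoted above; the per-home figure is not an official statistic but is simply what the GPT-3 comparison implies, and the function and variable names are illustrative.

```python
# Figures quoted in the article.
GPT3_TRAINING_MWH = 1_287        # estimated energy to train GPT-3
HOMES_EQUIVALENT = 130           # U.S. homes with the same annual usage
AI_SECTOR_TWH_RANGE = (85, 134)  # projected annual AI consumption by 2027

MWH_PER_TWH = 1_000_000          # 1 TWh = 1,000,000 MWh


def implied_mwh_per_home(total_mwh, homes):
    """Annual usage per home implied by the article's comparison."""
    return total_mwh / homes


def twh_to_home_equivalents(twh, mwh_per_home):
    """Number of homes whose annual usage adds up to `twh`."""
    return twh * MWH_PER_TWH / mwh_per_home


per_home = implied_mwh_per_home(GPT3_TRAINING_MWH, HOMES_EQUIVALENT)
print(f"Implied usage per home: {per_home:.1f} MWh/year")  # 9.9 MWh/year

for twh in AI_SECTOR_TWH_RANGE:
    homes = twh_to_home_equivalents(twh, per_home)
    print(f"{twh} TWh/year is roughly {homes / 1e6:.1f} million homes")
```

Under that implied rate of about 9.9 MWh per home per year, the projected 85 to 134 TWh range corresponds to roughly 8.6 million to 13.5 million U.S. homes.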

This immense computational demand is why digital infrastructure has become so critical. Just as railroads needed extensive networks of tracks and stations, AI requires a vast ecosystem of data centers, networking equipment and specialized hardware. These digital rails are the conduits through which data and compute flow, enabling the rapid processing and analysis that power AI applications. Today’s AI data centers are the colossal engines powering our digital age. For example, Google’s data center in Council Bluffs, Iowa, spans nearly three million square feet, equivalent to about 50 football fields.

The AI Value Chain: From Silicon To Services

At the heart of the AI ecosystem is a value chain that begins with chip manufacturers like NVIDIA, whose GPUs have become the de facto standard for AI computation. NVIDIA’s data center revenue reached $22.6 billion in the first quarter of its fiscal year 2025 (the quarter ending in April 2024), a 427% increase year over year. This rapid growth reflects the surging demand for AI-capable hardware and underscores the critical role that specialized chips play in the AI revolution.
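A percentage increase of this size is easier to grasp as a multiple. As a rough illustration, the sketch below backs out the prior-year quarterly figure implied by the two numbers quoted above; the function name is illustrative and the result is derived arithmetic, not a reported figure.

```python
def prior_value(current, pct_increase):
    """Value before a given percentage increase."""
    return current / (1 + pct_increase / 100)


current_bn = 22.6   # quarterly data center revenue, in $ billions
growth_pct = 427    # year-over-year increase, in percent

prior_bn = prior_value(current_bn, growth_pct)
print(f"Implied prior-year quarter: ${prior_bn:.2f} billion")  # $4.29 billion
print(f"Growth multiple: {current_bn / prior_bn:.2f}x")        # 5.27x
```

In other words, a 427% increase means revenue was a little more than five times the level of the same quarter a year earlier.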

GPUs, however, represent only the first link in the value chain. Between chip production and AI services lies an intermediary layer: the companies that house, operate and maintain these GPUs. These digital infrastructure companies make AI accessible and are responsible for the day-to-day operations of the data centers that power AI applications. They manage everything from the physical security of the facilities to the load balancing and resource allocation that keep AI services running smoothly.

While the end users of AI applications may never see or interact with them directly, the data centers and supporting infrastructure are the tracks that keep the digital trains running, ensuring that data flows smoothly and that computational resources are used efficiently.

The investments of OpenAI’s Sam Altman and others in energy production are a forward-thinking approach to one of the biggest challenges facing the AI industry: the enormous energy demands of large-scale AI computations. As AI models grow larger and more complex, their energy needs increase correspondingly. This has led to growing concerns about the environmental impact of AI and has spurred efforts to develop more energy-efficient AI hardware and to power data centers with carbon-free energy sources.

Building Towards The Future Of AI

As the AI revolution continues to unfold, we have a crucial role to play in ensuring its sustainable growth. Here are five actionable steps that we can take to contribute to energy efficiency and responsible AI development:

1. Invest in energy-efficient hardware.

Prioritize the development of energy-efficient AI hardware, including working closely with chip manufacturers to design more power-efficient GPUs or exploring alternative computing architectures like neuromorphic chips that mimic the energy efficiency of the human brain.

2. Optimize AI models for efficiency.

Encourage R&D efforts to create more efficient AI models that require less computational power without sacrificing performance, including techniques like model compression, knowledge distillation or the development of smaller, task-specific models instead of large, general-purpose ones.

3. Implement smart data center management.

Utilize AI itself to optimize data center operations. Machine learning algorithms can predict and manage workloads, adjust cooling systems in real time and optimize energy distribution, significantly reducing overall energy consumption.

4. Support research in carbon-free AI.

Allocate resources to support academic and industry research in carbon-free AI technologies, including funding research projects and partnering with universities to emphasize sustainability in AI development.

5. Develop sustainability standards.

Actively participate in industry collaborations to establish standards and best practices for energy efficiency in AI and digital infrastructure, including targets for reducing the carbon footprint of AI model training and inference or improving the power usage effectiveness (PUE) of data centers.
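For readers less familiar with PUE, it is the ratio of the total energy a data center draws to the energy delivered to its IT equipment, so a value of 1.0 would mean no overhead for cooling, power conversion or lighting. The sketch below shows the calculation and what a PUE improvement means in energy terms. The facility figures are hypothetical and chosen only for illustration, as are the function names.

```python
def power_usage_effectiveness(total_facility_kwh, it_equipment_kwh):
    """PUE = total facility energy / IT equipment energy (always >= 1.0)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("Total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_saved_kwh(it_equipment_kwh, pue_before, pue_after):
    """Energy saved at a constant IT load when PUE improves."""
    return it_equipment_kwh * (pue_before - pue_after)


# Hypothetical facility: 1,000,000 kWh of IT load, 1,500,000 kWh in total.
it_kwh = 1_000_000
total_kwh = 1_500_000

pue = power_usage_effectiveness(total_kwh, it_kwh)
print(f"PUE: {pue:.2f}")  # 1.50

# Hypothetical target: improve PUE from 1.5 to 1.2 at the same IT load.
saved = overhead_saved_kwh(it_kwh, pue_before=1.5, pue_after=1.2)
print(f"Energy saved: {saved:,.0f} kWh")  # 300,000 kWh
```

Because the saving scales with IT load, even a small PUE improvement becomes significant at the size of the facilities described earlier.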

As we continue to lay the digital tracks for AI’s transformative growth, we will enable the immense impact of the AI revolution. However, its true potential can only be realized if we ensure that its growth is sustainable and benefits all of its stakeholders.